From time to time, we get asked how PowerFlex can deliver performance and reliability to match a Fibre Channel fabric. It’s a great question, because in the past there was no doubt that Fibre Channel provided a superior outcome: it was designed for storage traffic!

Back in those days, though, the typical comparison was 1GbE Ethernet versus 4Gb FC, so FC obviously had a major performance advantage.

Likewise, on the protocol side, iSCSI typically ran with the standard 1500-byte MTU, whereas FC uses a 2148-byte frame. That was a slight advantage, but FC’s bigger advantage was its ‘lossless’, credit-based flow control, which was designed not to suddenly cut off traffic the way TCP was prone to when links reached saturation.

These days, though, TCP has continued to evolve, to the point where all modern operating systems handle saturation much more gracefully through their TCP congestion control mechanisms. Jumbo frames of up to 9000 bytes also reduce protocol overhead.
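To put a rough number on the jumbo-frame point, here is a minimal sketch that computes what fraction of the bytes on the wire are actual TCP payload for a full-size frame. It assumes common textbook header sizes (IPv4 and TCP without options, plus Ethernet header, FCS, preamble and inter-frame gap) and ignores iSCSI PDU headers and any OS or NIC offloads, so treat it as an illustration rather than a measurement.

```python
# Assumed per-frame overheads in bytes (textbook values, not measurements).
ETH_WIRE_OVERHEAD = 14 + 4 + 8 + 12  # Ethernet header + FCS + preamble/SFD + inter-frame gap
IP_TCP_HEADERS = 20 + 20             # IPv4 + TCP headers, no options


def tcp_payload_efficiency(mtu: int) -> float:
    """Fraction of on-the-wire bytes that carry TCP payload for a full-size frame."""
    payload = mtu - IP_TCP_HEADERS      # bytes left for data inside the MTU
    on_wire = mtu + ETH_WIRE_OVERHEAD   # bytes actually occupying the link
    return payload / on_wire


for mtu in (1500, 9000):
    print(f"MTU {mtu}: {tcp_payload_efficiency(mtu):.1%} of wire bytes are payload")
```

Under these assumptions the gain in raw efficiency is only a few percent (roughly 94.9% versus 99.1%); the bigger practical benefit of jumbo frames is that about six times fewer packets need to be processed for the same amount of data.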

So what about PowerFlex?

PowerFlex (née ScaleIO) recently celebrated its 10-year anniversary, but it’s important to note that it was founded around the time 10GbE had become the new de facto Ethernet standard in the data centre. This was incredibly important to PowerFlex: the order-of-magnitude increase in bandwidth made it possible, for the first time, to run storage workloads over an Ethernet fabric. Previously, 1GbE fabrics simply could not cut it for serious storage requirements.

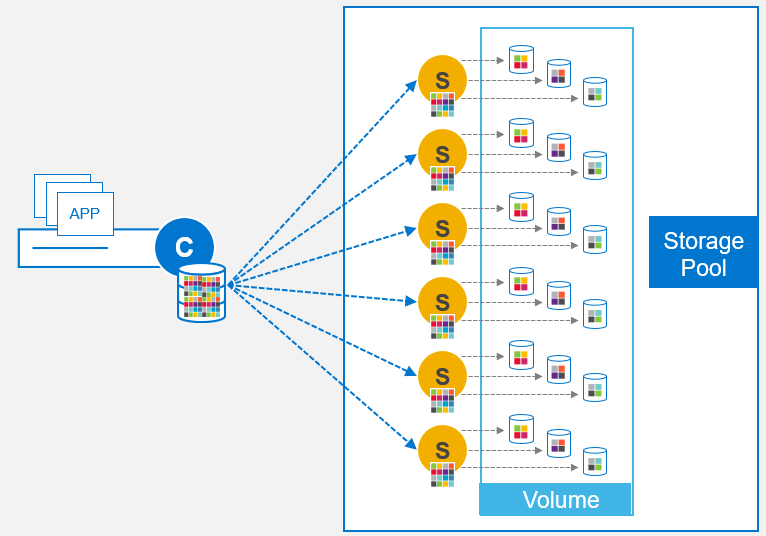

One major attribute that lets PowerFlex avoid congestion in the first place is simply its scale-out architecture. Every storage or HCI node provides at least 2x 10/25/100GbE ports dedicated purely to storage traffic. This aggregated bandwidth typically provides far more headroom than a traditional storage array can, so congestion is avoided through sheer available bandwidth (i.e. the fan-in to fan-out ratio is much lower than you’d normally see in an FC-centric system).
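A quick way to see the fan-in argument is to compare host-side bandwidth with storage-side bandwidth. The sketch below uses entirely hypothetical figures (40 hosts, 20 scale-out storage nodes, and a dual-controller array with eight 32 Gb/s front-end ports), nominal line rates, and a hypothetical `Tier` helper; it only illustrates the arithmetic, not any sizing guidance.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    """A group of identical endpoints (hypothetical figures, nominal line rates)."""
    name: str
    endpoints: int
    ports_per_endpoint: int
    gbps_per_port: float

    @property
    def aggregate_gbps(self) -> float:
        # Total nominal bandwidth of every port in this tier.
        return self.endpoints * self.ports_per_endpoint * self.gbps_per_port


def fan_in_ratio(hosts: Tier, storage: Tier) -> float:
    """Host-side bandwidth divided by storage-side bandwidth (lower = less contention)."""
    return hosts.aggregate_gbps / storage.aggregate_gbps


hosts = Tier("hosts", endpoints=40, ports_per_endpoint=2, gbps_per_port=25)
scale_out = Tier("scale-out storage nodes", endpoints=20, ports_per_endpoint=2, gbps_per_port=25)
array = Tier("dual-controller array", endpoints=2, ports_per_endpoint=4, gbps_per_port=32)

for storage in (scale_out, array):
    print(f"{storage.name}: {storage.aggregate_gbps:.0f} Gb/s, "
          f"fan-in {fan_in_ratio(hosts, storage):.1f}:1")
```

With these made-up numbers the scale-out tier sits at 2:1 while the array is near 7.8:1. The design point is that every storage node you add brings its own ports, so the ratio stays low as the cluster grows.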

The next unique factor that lets it avoid congestion in the first place is its data layout architecture. Every volume is broken up into 1MB (medium-grain) or 4KB (fine-grain) units spread across the storage nodes. This means your host has TCP connections open to every single storage node that the volume spans (typically up to 30 nodes / 300 disks as a best practice), and with this level of granularity you won’t see a single storage node being hit any harder than its peers. It’s actually quite amazing to look at the traffic patterns of enterprise storage workloads on a PowerFlex system: utilisation is remarkably even across all of the storage nodes, typically within a few percentage points of each other.
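The even-utilisation effect can be illustrated with a small simulation: split a volume into 1MB units, place them across 30 nodes, fire uniformly random 4KB reads at it, and count hits per node. The placement here is a deliberately simple round-robin (`node_for_offset` is a hypothetical helper); PowerFlex uses its own distribution scheme and also keeps a mirrored copy of each unit, neither of which is modelled. The volume size and I/O count are also hypothetical.

```python
import random
from collections import Counter

CHUNK_SIZE = 1024 * 1024          # 1MB "medium-grain" unit, per the text
VOLUME_SIZE = 64 * 1024 ** 3      # hypothetical 64 GiB volume
NODES = 30                        # best-practice span quoted in the text


def node_for_offset(offset: int, nodes: int = NODES) -> int:
    """Map a byte offset to a storage node.

    ASSUMPTION: simple round-robin placement of chunks, for illustration only;
    this is not the actual PowerFlex distribution algorithm.
    """
    chunk = offset // CHUNK_SIZE
    return chunk % nodes


def simulate_random_io(n_ios: int, io_size: int = 4096, seed: int = 1) -> Counter:
    """Issue uniformly random, io_size-aligned reads and count hits per node."""
    rng = random.Random(seed)          # seeded so runs are repeatable
    hits = Counter()
    blocks = VOLUME_SIZE // io_size    # number of aligned I/O slots in the volume
    for _ in range(n_ios):
        offset = rng.randrange(blocks) * io_size
        hits[node_for_offset(offset)] += 1
    return hits


hits = simulate_random_io(300_000)
mean = sum(hits.values()) / NODES
spread = (max(hits.values()) - min(hits.values())) / mean
print(f"busiest vs quietest node differ by {spread:.1%} of the mean")
```

Because each node owns thousands of small units scattered through the address space, random I/O lands on every node in almost equal measure, which is the same intuition behind the near-identical per-node utilisation described above.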

The reliability equation

The last aspect, and I would argue the most important, is the reliability of the fabric. Everyone has lived through a network outage at some point. Normally the FC folks roll their eyes at the network team when this happens, because it simply does not happen on FC fabrics (or only very, very rarely).

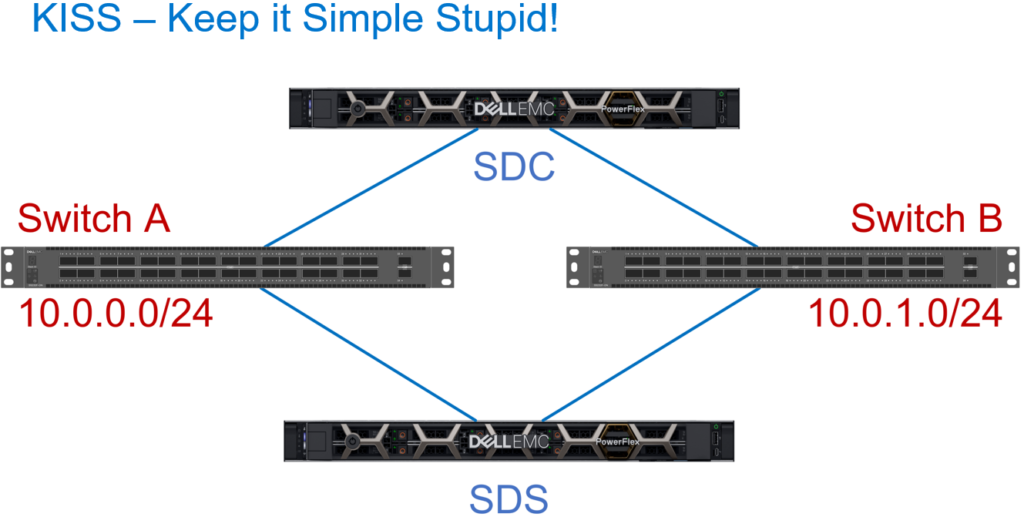

Why is this, though? Is FC simply superior in this regard? No, it’s not. It really comes down to physical topology design. Since day one, Fibre Channel fabrics have followed a very simple principle: air-gapped fabrics with host-based multipathing! That is why FC fabrics are so reliable. It’s simple, it’s idiot-proof and it just works.

So how does PowerFlex achieve the same level of reliability? In the simplest sense, “imitation is the sincerest form of flattery”: PowerFlex can do exactly what Fibre Channel does, with host-based, native IP multipathing across two independent, air-gapped Ethernet fabrics. This is a fundamental capability that has been in the product since its inception. Built by storage folks, for storage folks!
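The principle of host-based multipathing over two air-gapped fabrics can be sketched in a few lines. This is a generic illustration, not the PowerFlex client’s actual logic: the `Path` and `MultipathHost` classes and the fabric names are hypothetical. The host round-robins I/O across every healthy path and, when one fabric disappears, simply keeps going on the other.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Path:
    """One host-to-storage path through one fabric (names are hypothetical)."""
    fabric: str
    up: bool = True


@dataclass
class MultipathHost:
    """Generic host-side multipathing sketch: round-robin across healthy paths.

    NOT the PowerFlex implementation; it only shows the principle of the host
    choosing between two independent (air-gapped) fabrics.
    """
    paths: list
    _rr: itertools.count = field(default_factory=itertools.count)

    def pick_path(self) -> Path:
        # Only paths whose fabric is currently up are eligible.
        healthy = [p for p in self.paths if p.up]
        if not healthy:
            raise ConnectionError("all fabrics down")
        return healthy[next(self._rr) % len(healthy)]

    def send_io(self, n: int) -> dict:
        """Send n I/Os and report how many went over each fabric."""
        used = {p.fabric: 0 for p in self.paths}
        for _ in range(n):
            used[self.pick_path().fabric] += 1
        return used


host = MultipathHost([Path("fabric-A"), Path("fabric-B")])
print(host.send_io(10))   # {'fabric-A': 5, 'fabric-B': 5}
host.paths[0].up = False  # fabric A fails (switch outage, bad change, ...)
print(host.send_io(10))   # {'fabric-A': 0, 'fabric-B': 10}
```

Because the two fabrics share nothing, a fault or misconfiguration in one cannot propagate to the other; the host sees one path disappear and carries on.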

Data Centre Networking

There are, of course, many ways to design your data centre network. Some designs converge fabrics for cost-saving benefits, while others simply replace FC switches with Ethernet switches. A port is a port after all, and once you reach a certain scale you don’t lose any efficiency by having dedicated switches purely for storage traffic.

The last thing I will say, though, is that there is no one best way. Every environment is different, and we all have our own preferences based on our own experiences. This is where PowerFlex offers choice: “you do you”. If you prefer the simple air-gapped design, let’s do it. If you would like to add a dash of bonding to the equation, go for it. If you have mastered your network and don’t want the air gap, that’s entirely up to you.

Performance, performance, performance.

Okay, one last thing: PowerFlex with 25/100GbE networking delivers absolutely mind-blowing performance across 100% of the dataset for 100% random workloads, with millions of IOPS for small-block I/O at consistent ~200-300µs latencies, and, for large-block I/O, throughput where the bottleneck is the available network bandwidth. Seeing is believing, and this is one product where I always like to say: Bring it on!
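Two back-of-the-envelope calculations help frame those claims. Little’s Law (concurrency = throughput × latency) tells you how many I/Os must be in flight to sustain a given IOPS rate at a given latency, and the NIC line rate gives a hard ceiling for large-block throughput. The targets below (2 million IOPS at 250µs, 10 nodes with 2x 100GbE) are hypothetical inputs chosen to show the arithmetic, not measured PowerFlex results.

```python
def required_outstanding_ios(iops: float, latency_s: float) -> float:
    """Little's Law: average I/Os in flight = throughput x latency."""
    return iops * latency_s


def bandwidth_ceiling_gbytes(nodes: int, ports_per_node: int, gbps_per_port: float) -> float:
    """Upper bound on large-block throughput from NIC line rate alone, in GB/s."""
    return nodes * ports_per_node * gbps_per_port / 8  # 8 bits per byte


# Hypothetical targets, chosen only to illustrate the arithmetic.
print(required_outstanding_ios(2_000_000, 250e-6))  # I/Os in flight across the cluster
print(bandwidth_ceiling_gbytes(10, 2, 100))         # GB/s before protocol overhead
```

In this example, sustaining 2 million IOPS at 250µs needs only about 500 I/Os outstanding across the whole cluster, which is easy to reach when many hosts talk to many nodes in parallel. Real large-block throughput will land below the 250 GB/s line-rate ceiling once protocol overhead and write mirroring traffic are taken into account.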