By Simon Stevens, PowerFlex Engineering Technologist, EMEA. June 2021.

In which we discuss how two new features introduced with Dell EMC PowerFlex 3.6 give customers improved network resiliency.

Nearly every customer discussion that involves the Dell EMC PowerFlex family covers architecture, because “Architecture Always Matters”. There will be the inevitable mention of the highly available and super-resilient architecture of PowerFlex that enables it to keep delivering storage to the most demanding of applications, even when things fail within the infrastructure. Indeed, the ability of PowerFlex to instantly detect faults and then rebuild itself quickly, whilst simultaneously ensuring that there is zero or minimal impact to application workloads, was touched upon in a previous blog posted by one of my Dell colleagues, who used the phrase “Grace Under Pressure” to explain how PowerFlex handles such situations. Customers just love having applications that reside on infrastructure that is reliable and gives predictable performance even when disks and node hardware fail.

However, providing a reliable, predictable, highly-performant software-defined storage service needs more than just the ability to gracefully deal with the inevitable occasional stumble from the x86 hardware platform that you have chosen to build the software on top of. Software-defined storage intrinsically relies upon IP networking to provide the connectivity required to pair the storage providers with application workload clients. Hence, to deliver a truly resilient software-defined storage platform, one equally needs a truly resilient network to provide the glue between the compute components.

Of course, we could devote a whole series of blogs to discussing how best to design highly available, flexible and scalable IP networks, but we will leave those discussions for another day! Suffice to say that there are basics that are generally accepted for storage traffic across IP networks. The general wisdom that prevails comes from the long and successful history of FC SAN storage: have at least two ‘networks’, have at least two switches within each network for n+1 availability, connect each host to both networks and ensure that hosts have multiple NICs. On top of this, we can then use software tools to make things even better, within the switches, the hosts and the storage software layer itself. Hence the proliferation of options such as trunking, teaming, LAGs and so on at the network layer. But networking issues can still happen, even with the best designed & correctly implemented IP networks. So once again, the importance of having “Grace Under Pressure”, and of being able to handle issues that might be caused by faulty networking but must ultimately be dealt with by PowerFlex, cannot be overstated.

PowerFlex defends itself against IP networking gremlins by calling on an array of features within its armoury to help deal with the inevitable network service issue. Its built-in native multi-pathing ensures that IO requests across a failed path get re-directed instantly down a surviving path, whilst the MDM cluster regularly checks that IP communication between all SDS and MDM components is working as expected and if not, the MDM will “disconnect” failed SDS nodes & cause a rebuild to occur in order to maintain & preserve storage integrity. It also goes without saying that once networking issues have been resolved, the failed nodes are easily & safely added back into a PowerFlex cluster. But here in the PowerFlex Team, we are not allowed to rest on our laurels, because we listen to our customers when they tell us about issues that they have experienced within their environments. Indeed, we take our customer feedback seriously and we use it to drive ongoing product improvements.

True to this philosophy, two Network Resiliency Improvements have been added into PowerFlex 3.6, each of which came from our customer base. Both improvements will ensure that our customers will now be better protected and better able to deal with network issues that are outside the direct control of PowerFlex.

Feature #1 – SDS Proxy

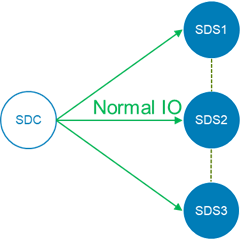

This feature has been introduced to give improved network resiliency for customers who utilise two-layer (or disaggregated) PowerFlex systems. In these, the SDC client is external to the PowerFlex storage cluster. During normal operation, if a volume is mapped to an SDC, then the SDC communicates with all of the SDS nodes within the Protection Domain that the volume is provisioned across. Remember that with PowerFlex, a volume is spread evenly across all disks that are managed by each SDS in the Storage Pool, so the SDC will be communicating with a number of SDS nodes in parallel. This is shown in our example below, where we have 3 SDS nodes and the SDC is sending IO to all three of them in parallel.

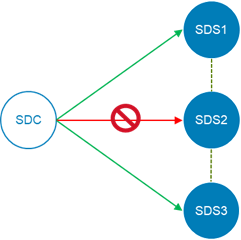

Now, let us consider what happens when a network path between an SDC and an SDS is disconnected or fails, without there being a general network failure of the cluster. In such a situation, there is a temporary network issue between our SDC client and one of the SDS nodes:

In this scenario, because all of the SDS nodes are visible to each other, a rebuild of the SDS cluster will not get triggered because, from the cluster point of view, there is no issue – everything is good within the SDS cluster!

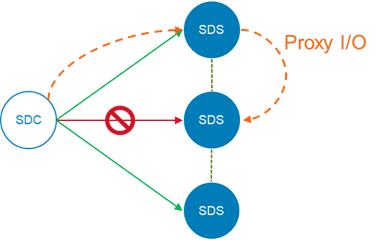

With previous versions of PowerFlex, such a scenario would cause any IO on the SDC in question to fail until the temporary network issue was fixed. But with PowerFlex 3.6, if a path to one SDS fails, the SDC will retry IOs using another SDS in the Protection Domain as a proxy SDS for the IO requests and will then use that proxy SDS until the disconnected network link issue is resolved. This means that IOs from the SDC will continue despite the external network issue, giving us improved network resiliency.

FIGURE 3 – THE SDS PROXY ENSURES THAT ALL IO REQUESTS ARE STILL SERVED FROM THE SDC

Obviously, if such an issue happens then it needs to be resolved, so alerts will be generated by PowerFlex to highlight that there are disconnected links between the SDC and the SDS. The alerts are cleared once the network problem is fixed. This feature is enabled by default once customers have upgraded to PowerFlex 3.6, so existing customers will not need to do a thing to start getting the benefits of the SDS Proxy feature. It is also worth noting that this will only work for complete and continuous network disconnections. For situations where port flapping within a PowerFlex system causes problems, our second new feature comes into play.
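To make the proxy behaviour a little more concrete, here is a toy Python model of it. This is only a sketch under stated assumptions, not PowerFlex code: the `Sds` and `Sdc` classes, the `broken_paths` set and the `forward_read` method are all hypothetical names invented for illustration, and the way a proxy is chosen (first reachable SDS) is an assumption rather than the documented selection logic.

```python
from dataclasses import dataclass, field


@dataclass
class Sds:
    """Toy stand-in for an SDS node (hypothetical, not a PowerFlex API)."""
    name: str
    blocks: dict = field(default_factory=dict)

    def read(self, block_id):
        return self.blocks[block_id]

    def forward_read(self, owner, block_id):
        # SDS-to-SDS links are healthy in this scenario, so a proxy SDS
        # can fetch the block from the owning SDS on the SDC's behalf.
        return owner.read(block_id)


class Sdc:
    """Toy SDC that falls back to a proxy SDS when a direct path is down."""

    def __init__(self, sds_nodes):
        self.sds_nodes = sds_nodes
        self.broken_paths = set()  # names of SDS nodes this SDC cannot reach
        self.alerts = []

    def read(self, owner, block_id):
        """Return (data, name of the SDS that served the request)."""
        if owner.name not in self.broken_paths:
            return owner.read(block_id), owner.name
        # Direct path is down: raise an alert and retry through a proxy.
        self.alerts.append(f"SDC-SDS link disconnected: {owner.name}")
        for candidate in self.sds_nodes:
            if candidate.name not in self.broken_paths:
                return candidate.forward_read(owner, block_id), candidate.name
        raise ConnectionError("no reachable SDS in the Protection Domain")


nodes = [Sds("sds1", {0: b"a"}), Sds("sds2", {1: b"b"}), Sds("sds3", {2: b"c"})]
sdc = Sdc(nodes)
sdc.broken_paths.add("sds2")             # simulate the failed SDC-to-SDS path
data, served_via = sdc.read(nodes[1], 1)  # block owned by sds2, served via a proxy
```

In this model the read still succeeds even though the SDC cannot reach the owning SDS directly, and an alert is recorded so that the broken link gets investigated.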

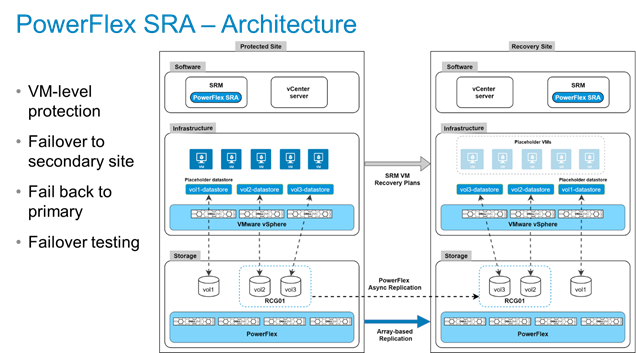

Feature #2 – Proactive Network Disqualification

One or more flapping NIC ports, or a socket experiencing frequent disconnects (aka port flapping), can cause real performance issues in any system that depends on connection-oriented communications, such as PowerFlex’s fully distributed architecture. Because such issues can hit any component, one flapping port can affect the performance of the entire system. With any disconnection, a user will experience a 2 second network hiccup due to the way that TCP works, so if a port is flapping, the results can be horrendous from a performance point of view.

The great news is that PowerFlex 3.6 now detects this and proactively disqualifies any flapping paths, preventing general disruption across the system. Again, users will be alerted when this happens, so that they can investigate and resolve the issue. Once resolved, PowerFlex will automatically test the disqualified path before adding it back into the system, but a single disconnection from the port will once again disqualify the path.
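The disqualify, test and re-admit cycle described above can be sketched as a small state machine. Again, this is an illustrative model only: the `PathMonitor` class is hypothetical, and the threshold values (`max_disconnects`, `window_s`) are made-up numbers, since the actual detection criteria used by PowerFlex are not described here. The sketch also assumes the "one more disconnect disqualifies again" rule applies for as long as the path remains in service after re-admission.

```python
from collections import deque


class PathMonitor:
    """Toy flap detector for one network path (not PowerFlex code)."""

    def __init__(self, max_disconnects=3, window_s=60.0):
        # Both thresholds are invented for illustration.
        self.max_disconnects = max_disconnects
        self.window_s = window_s
        self.disconnects = deque()  # timestamps of recent disconnects
        self.disqualified = False
        self.on_probation = False   # True after a path has been re-admitted
        self.alerts = []

    def record_disconnect(self, now):
        if self.disqualified:
            return  # path is already out of service
        self.disconnects.append(now)
        # Forget disconnects that fall outside the sliding window.
        while self.disconnects and now - self.disconnects[0] > self.window_s:
            self.disconnects.popleft()
        # A re-admitted path is disqualified again on a single disconnect.
        if self.on_probation or len(self.disconnects) >= self.max_disconnects:
            self._disqualify(now)

    def _disqualify(self, now):
        self.disqualified = True
        self.on_probation = False
        self.disconnects.clear()
        self.alerts.append(f"path disqualified at t={now}")

    def requalify(self, path_test_passed):
        """Re-admit the path only if the automatic path test passed."""
        if self.disqualified and path_test_passed:
            self.disqualified = False
            self.on_probation = True
        return not self.disqualified
```

A sliding window is used here so that an occasional isolated disconnect does not take a path out of service, while a burst of disconnects does.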

The net result of this improvement can clearly be seen from the following testing – on the left is a measurement of performance observed with a port flapping event running under PowerFlex 3.5.x code, while on the right is the same test repeated once the system had been upgraded to PowerFlex 3.6.

Taken together, these two new features give PowerFlex 3.6 customers even more network resilience. “Grace Under Pressure”… even when it is the network that is applying the pressure!

Hi Simon, is there any publicly available information on how PowerFlex detects port flapping issues? Or is it micro-flapping? What are the thresholds?

We see path disqualifications, but no issues in the network logs.

Hi Frank, it looks like we do not have any public-facing documentation that describes this in depth. Glad to hear that you see the path disqualification error messages in the Alert Logs, these should “clear up” once the issue is resolved. I have heard of issues with network hardware where the hardware has “had issues” but not reported it, because timers on the network switches that manage such physical link failures (things such as link down event detection and handling) have been modified by the network admins to a value that is “longer” than you might ordinarily expect, with the end result that micro-flapping events on the switches are not being reported/picked up in the switch logs! If you are repeatedly seeing path disqualifications, but nothing in your network switch logs, then I would strongly recommend raising an SR to get Support to assist you in resolving whatever is causing this.