By Simon Stevens, Dell PowerFlex Engineering Technologist, 30th June 2023.

Last week, I had the pleasure of attending SUSECON23, which was held in Munich and was the first “in-person” conference that SUSE had run post-pandemic. I was lucky enough to be invited to speak at one of the break-out sessions, alongside Gerson Guevara, who works in the Technical Alliances Team at SUSE. It is always a pleasure to meet up with interesting people that you can learn things from, and Gerson was definitely a case in point! He was certainly a great co-pilot to present with.

Together, we presented a session on “Joint Initiatives & Solutions Between SUSE and Dell PowerFlex” to a packed room. We discussed the joint Dell PowerFlex & SUSE Rancher solutions that we have available today. We also gave a tantalising glimpse of what the future might hold, by discussing the early results from a proof of concept that our Engineering teams have been working on together for a few months.

What I find very interesting is that a lot of our joint customers remain blissfully unaware of the amount of collaborative work that the Dell PowerFlex & SUSE teams have done together over the years, so I thought it best to give a quick overview of what is already out there and available today.

SUSE and Dell Technologies already have a deep partnership, one that has been built over a 20+ year period. Our joint solutions can be found in sensors and in machines; they exist in the cloud, on-premises and at the edge, all of them built with a strong open source backbone. As such, Dell & SUSE are addressing the operational and security challenges of managing multiple Kubernetes clusters, whilst also providing DevOps teams with integrated tools for running containerized workloads. What many people outside of Dell might not be aware of is that a number of SUSE products & tool sets are used by Dell developers when creating the next generation of Dell products and solutions.

When one looks at the wide range of Kubernetes management platforms that are available, SUSE are certainly amongst the leaders in that market today. SUSE completed its acquisition of Rancher Labs back in December 2020 and, by doing so, was able to bring together multiple technologies to help organizations. SUSE Rancher is a free, 100% open-source Kubernetes management platform that simplifies cluster installation and operations, whether they are on-premises, in the cloud or at the edge, giving DevOps teams the freedom to build and run containerized applications anywhere. Rancher also supports the major public cloud Kubernetes services, including EKS, AKS and GKE, as well as K3s at the edge. It provides simple, consistent cluster operations, including provisioning, version management, visibility and diagnostics, monitoring and alerting, and centralized audit. Rancher itself is free and has a community support model, so customers that absolutely need enterprise-level support can opt for Rancher Prime, which is the model that includes full enterprise support and access to SUSE’s trusted private container registries.

Current Joint Dell PowerFlex-SUSE Rancher Solutions

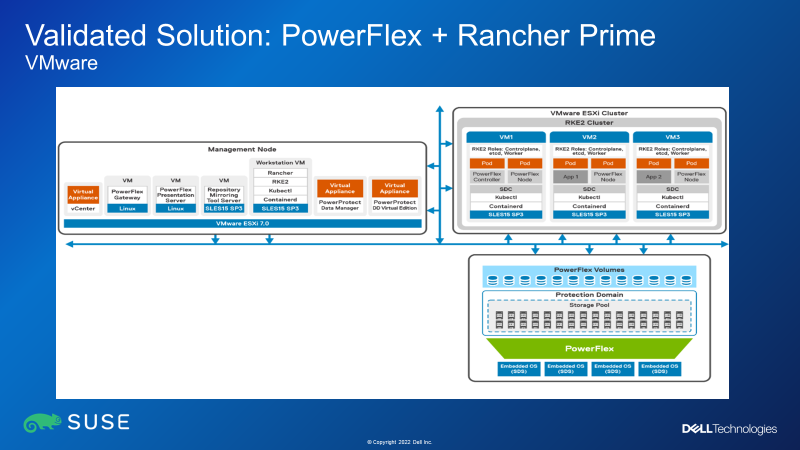

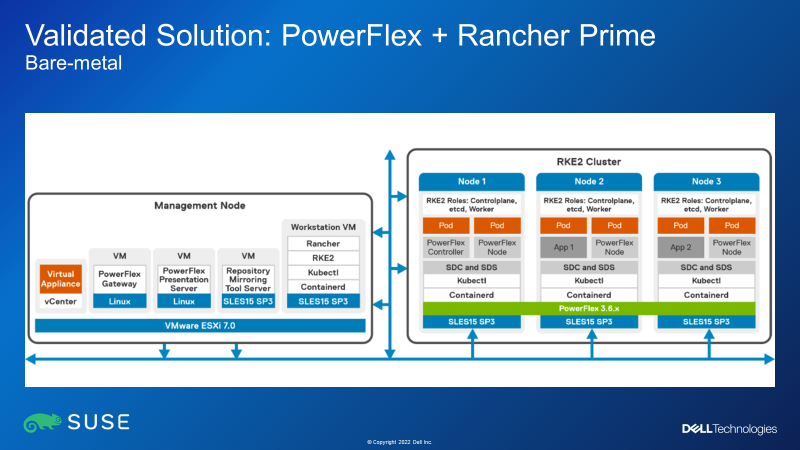

Back to my earlier point about the collaboration that exists between the SUSE and Dell PowerFlex Solutions Teams. We have been working together on joint solutions for several years now and we are constantly updating our whitepapers to ensure that they remain up to date. To simplify things we have consolidated several whitepapers into one, so that it not only describes how to deploy SUSE Rancher clusters with PowerFlex, but also how to then protect those systems using Dell PowerProtect Data Manager. The whitepaper is available to download from Dell Infohub. It describes how to deploy Rancher in virtual environments, running on top of VMware ESXi, as well as deploying Rancher running on bare-metal nodes. Let me quickly run you through the various solutions that are detailed in the whitepaper:

As can be seen from Figure 2 above, the RKE2 cluster is running in a two-layer PowerFlex deployment architecture – that is to say, the PowerFlex storage resides on separate nodes from the compute nodes. This separation of storage and compute means that the architecture lends itself really well to Kubernetes environments, where there still tends to be a massive disparity between the number of compute nodes needed and the amount of persistent storage needed. PowerFlex can provide a consolidated software-defined storage infrastructure – think of scenarios where there are lots of clusters, running a mixture of both Kubernetes and VMware workloads, and where you want a shared storage platform to simplify storage operations across all workstreams.

Figure 2 also shows that the RKE2 clusters are deployed on top of VMware ESXi, in order to obtain the benefits of running each of the RKE2 nodes as VMs.

The whitepaper also discusses deployment of Rancher clusters running on bare-metal HCI nodes – that is to say, deploying the Rancher cluster onto nodes that have SSDs in them. The whitepaper talks through an option where first SUSE SLES15 SP is installed, then PowerFlex is installed to use the SSDs in the nodes to create a storage cluster. Finally, the RKE2 cluster gets deployed, using the PowerFlex storage as the storage class for Persistent Volume Claims. It is worth noting that “HCI-on-Bare-metal” PowerFlex deployments are usually done using PowerFlex Custom Nodes or outside of PowerFlex Manager control, as we currently do not have a PowerFlex Manager template that deploys either SLES15 SP3 or RKE2!

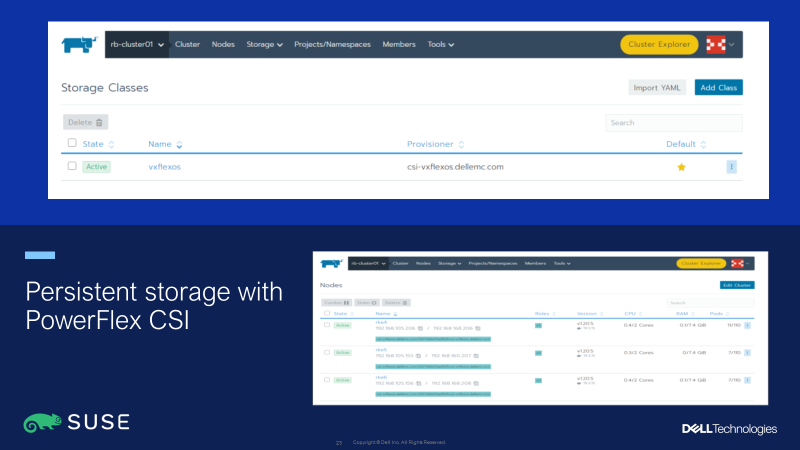

Kubernetes clusters that want to access persistent storage resources need to utilise API calls via the CSI driver for the storage platform being used. With SUSE Rancher, it is easy to deploy the PowerFlex CSI Driver, as it can be installed with a single click from the SUSE Rancher Marketplace. Alternatively, the latest CSI driver is also available directly from the Dell CSM GitHub: https://dell.github.io/csm-docs/docs/csidriver/

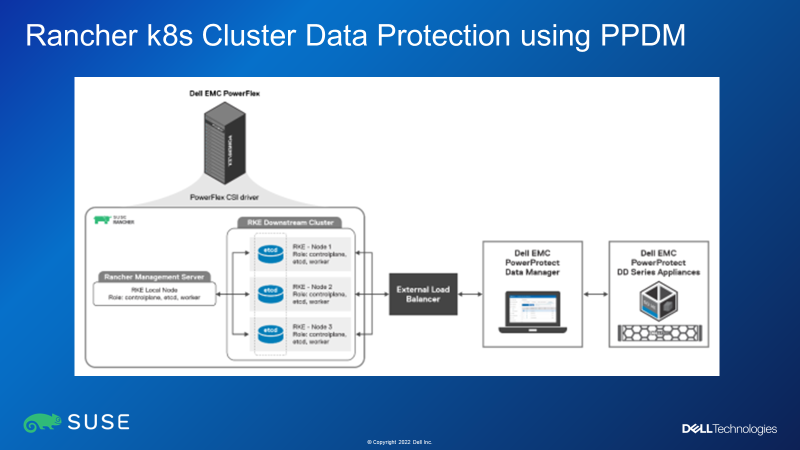
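To make the storage class idea a little more concrete, here is a minimal Python sketch (not taken from the whitepaper) that builds a StorageClass and a Persistent Volume Claim for a cluster where the PowerFlex CSI driver is already installed. The provisioner name and the `storagepool` / `systemID` parameters follow the sample storage classes published with the Dell CSI driver as I understand them, so please treat them as assumptions and check them against the driver version you deploy. The helper function names, the pool name `pool1`, the system ID and the object names are all hypothetical placeholders.

```python
import json

# Assumption: provisioner name used by the Dell CSI driver for PowerFlex.
# Verify it against the documentation for the driver version you install.
PROVISIONER = "csi-vxflexos.dellemc.com"


def build_storage_class(name, storage_pool, system_id):
    """Return a StorageClass manifest backed by a PowerFlex storage pool."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": PROVISIONER,
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
        "volumeBindingMode": "WaitForFirstConsumer",
        # Assumption: parameter keys as used in the driver's sample manifests.
        "parameters": {"storagepool": storage_pool, "systemID": system_id},
    }


def build_pvc(name, storage_class, size_gi, namespace="default"):
    """Return a PersistentVolumeClaim that requests storage from the class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


def render(manifests):
    """Serialise the manifests as a single Kubernetes List in JSON."""
    doc = {"apiVersion": "v1", "kind": "List", "items": manifests}
    return json.dumps(doc, indent=2)


if __name__ == "__main__":
    # Hypothetical values: replace with your own pool name and system ID.
    sc = build_storage_class("powerflex-sc", "pool1", "0000000000000000")
    pvc = build_pvc("demo-pvc", sc["metadata"]["name"], 8)
    print(render([sc, pvc]))
```

Because `kubectl` accepts JSON as well as YAML, the printed output could be reviewed and then piped into `kubectl apply -f -` against a test cluster. I have kept it to the Python standard library so that it runs anywhere without extra packages.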

Finally, the whitepaper also discusses how to integrate SUSE Rancher-managed Kubernetes clusters with Dell PowerProtect Data Manager (PPDM) for data protection in one of two ways: either by directly connecting to an RKE2 downstream single node with controlplane and etcd roles, or through a load balancer, when there are multiple RKE2 nodes with controlplane and etcd roles in an RKE2 downstream cluster.

Hence you can see that Dell & SUSE have worked incredibly closely to create a number of solutions, which not only give customers a choice in how they deploy their RKE2 clusters with PowerFlex (either with or without VMware, using either two-layer or hyper-converged options) but also show how such solutions can be fully protected & restored using Dell PPDM.

A Glimpse into the Future…?

Back to the breakout session at SUSECON, and everyone in the room was excited to discover what the “hush-hush” news from the Proof-of-Concept was all about! Suffice to say that what we were presenting is not on any product roadmap, nor has it been committed to by either company. What we were able to show was an 8-minute “summary video” that was created in our joint PoC lab environment. The video explained how we were able to use PowerFlex as a storage class within SUSE Harvester HCI clusters. For those not in the know – SUSE Harvester provides small Kubernetes clusters for ROBO, Near-Edge and Edge use cases. Even though Harvester can make use of the Longhorn storage that resides in the Harvester cluster nodes, we are hearing of use cases where hundreds of Harvester clusters all want to access a single shared-storage platform. Does that ring any bells with my earlier observations? Anyway, such solutions are still being looked at as potential use cases – in my opinion, it is not something that I would normally have associated with edge use cases, but having seen what was happening myself at SUSECON23 last week, I am happy to stand corrected and will continue to monitor this space going forwards!

For the rest of SUSECON23, I shared my time between attending the various keynote sessions, manning the Dell booth and obviously talking with partners and other conference attendees. There was a fair amount of interest in what Dell are doing in and around the container space, so it was good to be manning a booth from which we were able to show how our Dell CSM app-mobility module makes it simple to migrate Kubernetes applications between multiple Kubernetes clusters.

This was also the first time that I had attended an ‘in-person’ conference since before the pandemic, so it was genuinely fantastic to meet & be able to talk to people again. Sometimes you do not realise just how much you have missed things until you do them again! However, I was also reminded of something that I had not missed when it came to finally saying “Auf Wiedersehen” to Munich and embarking on my journey back to the UK. Thanks to the incredible storms that swept Germany last Thursday, we eventually took off from Munich 4 hours late, on what I was reliably informed was the last flight that the German ATC allowed to take off before they closed German airspace for the night. But when all is said and done... Here’s to SUSECON24!!

Awesome stuff Simon!

Dear Simon,

I came across your “Glimpse into the Future” and we are currently dealing with these topics. We didn’t even consider this combination because we didn’t think it was possible due to Longhorn. However, being able to use a Harvester cluster with virtualized workloads with a Powerflex instead of Longhorn would be very interesting for us.

Has the idea been further developed or has it been abandoned? Or did the PoC perhaps lead to the current limited solution of Harvester with the CSI driver (https://docs.harvesterhci.io/v1.2/advanced/csidriver/)?

Thank you very much!